The history of computing resembles a digital renaissance: a series of transformative innovations that have reshaped society and carried humanity into the information age. From the abacus to artificial intelligence, the evolution of computing is a story of ingenuity, creativity, and the relentless pursuit of progress.

The story begins thousands of years ago with the abacus, a simple counting device that ancient civilizations used to perform basic arithmetic. Rudimentary as it was, the abacus helped lay the foundation for computational thinking and served as a precursor to more sophisticated computing technologies.

The 19th century brought mechanical calculators into wider use and produced the first designs for general-purpose computing machines, pioneered by visionaries like Charles Babbage and Ada Lovelace. Babbage’s Analytical Engine, conceived in the 1830s, introduced the concept of programmable computation, while Lovelace’s 1843 notes on the Engine, which included an algorithm for computing Bernoulli numbers, foreshadowed the principles of modern computer programming.

The 20th century saw exponential growth in computing power. Electronic computers, which appeared in the 1940s, marked a paradigm shift in computational capability, processing data far faster than their mechanical predecessors. The ENIAC, unveiled in 1946, was among the first electronic general-purpose computers and laid the groundwork for the digital revolution to come.

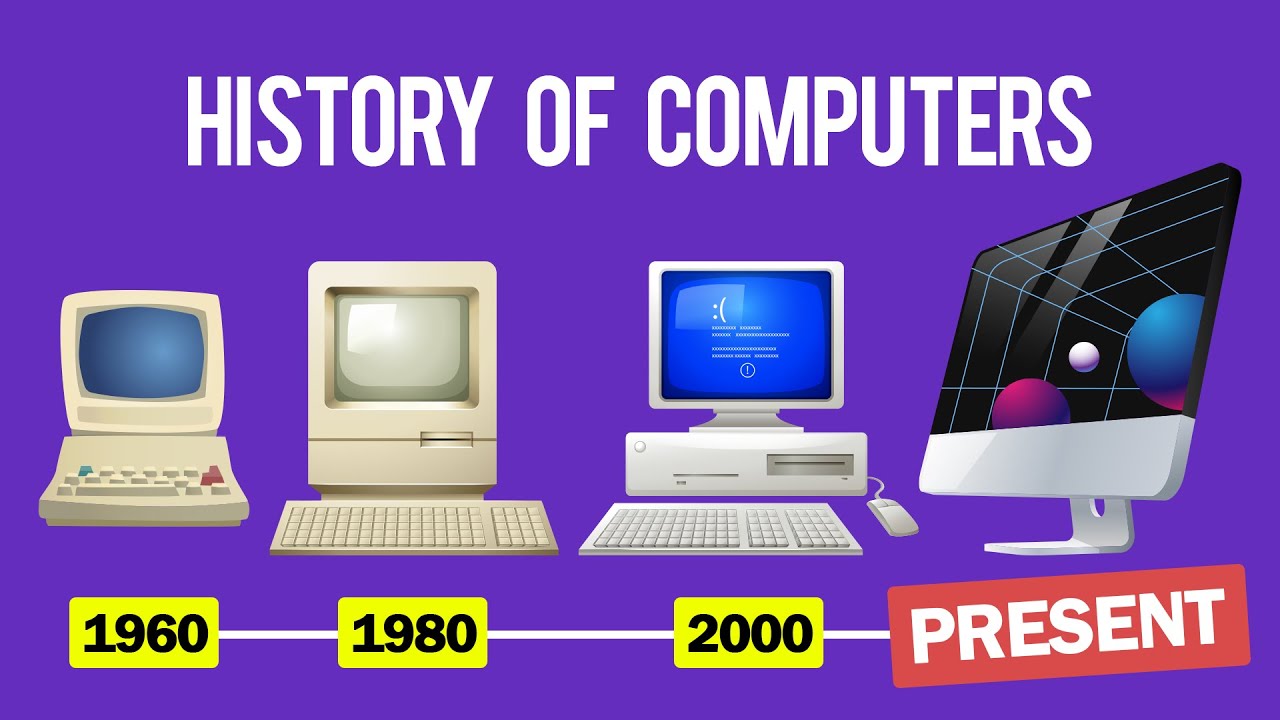

The following decades brought rapid advances in hardware and software, driven by breakthroughs in semiconductor technology: the transistor, the integrated circuit, and eventually the microprocessor. As computers shrank, personal computing took off in the 1970s and 1980s, with iconic machines like the Apple II and IBM PC bringing computing to individuals and businesses alike.

The rise of the internet in the late 20th century transformed computing from a localized tool into a global network of interconnected systems. The World Wide Web, which Tim Berners-Lee proposed at CERN in 1989 and which became publicly available in the early 1990s, revolutionized how information is accessed and shared, ushering in a new era of communication and collaboration.

In the 21st century, computing has become pervasive, with artificial intelligence, cloud computing, and big data analytics driving innovation across industries. From healthcare to finance to transportation, computers now play a central role in shaping society, opening new opportunities and helping to address complex challenges.

As we stand on the cusp of a new digital renaissance, the evolution of computing continues to unfold, promising further advances and discoveries in the years to come. By understanding this rich history, we gain insight into the forces that drive progress and the vast potential of human ingenuity in the digital age.